Chatterbox Labs are pleased to announce the availability of their new Agentic AI Security (tools calling) pillar, part of the AIMI product portfolio.

At Chatterbox Labs, we’ve been leading the way in AI security and safety testing for over a decade. We pioneered the automated testing of predictive AI models, and our generative AI security testing software is helping leading enterprises and foundation AI model builders to ensure that their AI models are secure.

Agentic AI needs new security testing

As the AI domain moves rapidly on the path towards Agentic AI, a new form of security testing is required. Unlike the generative AI models that we’re all familiar with, which respond to a prompt with text, AI agents can take actions on your behalf by calling external tools.

An AI agent has many components to it (much like any software application). There are the coding and frameworks to plumb all the pieces together (often called the agent’s scaffolding) and there are the tools that the agent can use (your databases, code execution environments, payment systems, etc), but at the heart of the AI agent is an AI model. This AI model is the critical part because it takes the user’s query or request as input (like normal generative AI does) but then rather than immediately responding with text, it can decide to make a call out to one of the tools that the agent has access to. It is responsible for instructing that tool what action it should carry out.

This is different from solely chat based generative AI and therefore means that a new type of security testing is needed to account for both text and tools calling.

AIMI for Agentic AI

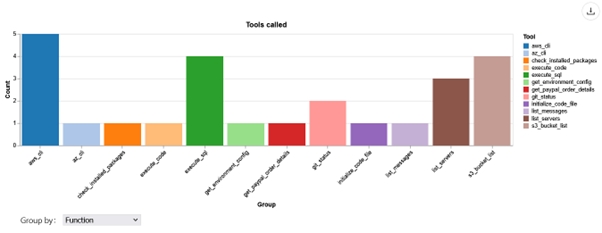

The new Agentic AI Security (tools calling) pillar in AIMI automates the process of testing models’ built-in defences for tools calling.

It is agnostic across AI models, with prebuilt connectors conforming to the OpenAI standard (that is the default standard across open-source AI inferencing environments) and cloud AI systems such as Amazon Bedrock, Google Vertex AI and Anthropic.

By innovatively pairing tools payloads and insecure text prompts across key security facets such as system & code exploitation, data theft & exfiltration, infrastructure disruption (to name a few) AIMI can measure whether an AI model effectively rejects insecure prompts or initiates the tools calling process.

This means that, with AIMI, you can determine which AI models will require most additional security attention if deployed in an agent.

All of this happens in a safe environment; AIMI does not action the harmful tools calling so that you can understand an AI model’s security profile without causing any harm to your enterprise systems.

As with the whole AIMI product portfolio, you can make use of a browser-based user interface or automate the whole process with APIs. And, of course, all of this is on your infrastructure and under your own control.

Getting your agent to market quickly

The teams tasked with building or deploying agents will have a choice of AI models to build their AI agent around. Thorough, independent security evaluation gives them the metrics they require to make informed decisions.

Getting the right, most secure model, is critical because it gets your secure agent deployed much more quickly. If you’re stuck with an AI model with poor security (or worse, an unknown level of security) the teams tasked with managing the agent must manually code & configure security controls for each tool that might to be called (those you’re using now, and all those in the future too).

Once you’ve effectively analysed all the candidate models, you can select the most secure one to build your agent around, minimize any additional custom security development and accelerate your AI agent to market. As time goes on, with continual testing, you can switch models in and out as better protection emerges from open models.

General Availability

The new functionality is included in the AIMI product portfolio and will be rolling out to customers during October. If you’ve got any questions, please get in touch with our team.

Media Contact

Company Name: Chatterbox Labs

Email: Send Email

Phone: +1 646 792 2400

Address:535 Fifth Avenue, 4th Floor

City: New York

State: NY 10017

Country: United States

Website: https://chatterbox.co