Backed by a New Partner Ecosystem, University Collaborations in the U.S., U.K., and India, and a Pledge from Private Foundations

The Responsible AI Institute (RAI Institute) is taking bold action to reshape and accelerate the future of responsible AI adoption. In response to rapid regulatory shifts, corporate fear of missing out (FOMO), and the rise of agentic AI, RAI Institute is expanding beyond policy advocacy to deploy AI-driven tools, agentic AI services, and new AI verification, badging, and benchmarking programs. Backed by a new partner ecosystem, university collaborations in the U.S., U.K., and India, and a pledge from private foundations, RAI Institute is equipping organizations to confidently adopt and govern multi-vendor agent ecosystems.

THE AI LANDSCAPE HAS CHANGED — AND RAI INSTITUTE IS MOVING FROM POLICY TO IMPACT

Global AI policy and adoption are at an inflection point. AI adoption is accelerating, but trust and governance have not kept pace. Regulatory rollbacks, such as the revocation of the U.S. AI Executive Order and the withdrawal of the EU’s AI Liability Directive, signal a shift away from oversight, pushing businesses to adopt AI without sufficient safety frameworks.

- 51% of surveyed companies have already deployed AI agents, and 78% plan to implement them soon (LangChain, 2024).

- 42% of workers say accuracy and reliability are top priorities for improving agentic AI tools (Pegasystems, 2025).

- 67% of IT decision-makers across the U.S., U.K., France, Germany, Australia, and Singapore report adopting AI despite reliability concerns, driven by FOMO (fear of missing out) (ABBYY Survey, 2025).

At the same time, AI vendors like OpenAI and Microsoft are urging businesses to "accept imperfection," a stance that directly contradicts the principles of responsible AI governance. AI-driven automation is already reshaping the workforce, yet most organizations lack structured transition plans, leading to job displacement, skill gaps, and growing concerns over AI’s economic impact.

The RAI Institute sees this moment as a call to action that goes beyond policy frameworks: creating concrete, operational tools and sharing and learning from real-world member experiences to safeguard AI deployment at scale.

STRATEGIC SHIFT: FROM POLICY TO PRACTICE

Following a six-month review of its operations and strategy, RAI Institute is realigning its mission around three core pillars:

1. EMBRACING HUMAN-LED AI AGENTS TO ACCELERATE RAI ENABLEMENT

The Institute will lead by example, integrating AI-powered processes across its operations as “customer zero.” From AI-driven market intelligence to verification and assessment acceleration, RAI Institute is actively testing the power and exposing the limitations of agentic AI, ensuring it is effective, safe, and accountable in real-world applications.

2. SHIFTING FROM AI POLICY TO AI OPERATIONALIZATION

RAI Institute is shifting from policy to action by deploying AI-driven risk management tools and real-time monitoring agents that help companies automate evaluation and third-party verification against frameworks such as the NIST AI RMF, ISO/IEC 42001, and the EU AI Act. Additionally, RAI Institute is partnering with leading universities and research labs in the U.S., U.K., and India to co-develop, stress-test, and pilot responsible agentic AI, so that enterprises can measure agent performance, alignment, and unintended risks in real-world scenarios.

3. LAUNCHING THE RAISE AI PATHWAYS PROGRAM

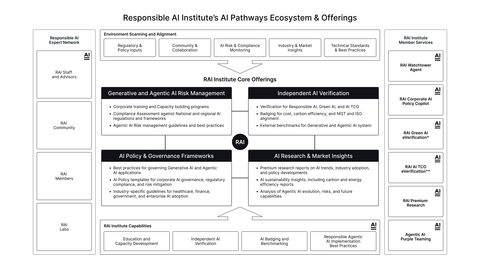

RAI Institute is accelerating responsible AI adoption with the RAISE AI Pathways Program, which delivers a suite of new insights, assessments, and benchmarks powered by human-augmented AI agents to help businesses evaluate AI maturity, compliance, and readiness for agentic AI ecosystems. The program will leverage collaborations with industry leaders, including the Green Software Foundation and the FinOps Foundation, and will be backed by a matching grant pledge from private foundations, with further funding details to be announced later this year.

"The rise of agentic AI isn’t on the horizon — it’s already here, and we are shifting from advocacy to action to meet member needs," said Jeff Easley, General Manager, Responsible AI Institute. "AI is evolving from experimental pilots to large-scale deployment at an unprecedented pace. Our members don’t just need policy recommendations — they need AI-powered risk management, independent verification, and benchmarking tools to help deploy AI responsibly without stifling innovation."

RAISE AI PATHWAYS: LEVERAGING HUMAN-LED AGENTIC AI FOR ACCELERATED IMPACT

In March, RAI Institute will begin a phased launch of its six AI Pathways Agents, developed in collaboration with leading cloud and AI tool vendors and university AI labs in the U.S., U.K., and India. These agents are designed to give enterprises access to external tools for independently evaluating, building, deploying, and managing responsible agentic AI systems with safety, trust, and accountability.

The phased rollout will ensure real-world testing, enterprise integration, and continuous refinement, enabling organizations to adopt AI-powered governance and risk management solutions at scale. Early access will be granted to select partners and current members, with broader availability expanding throughout the year. Sign up now to join the early access program!

Introducing the RAI AI Pathways Agent Suite:

- RAI Watchtower Agent – Real-time AI risk monitoring to detect compliance gaps, model drift, and security vulnerabilities before they escalate.

- RAI Corporate AI Policy Copilot – An intelligent policy assistant that helps businesses develop, implement, and maintain AI policies aligned with global regulations and standards.

- RAI Green AI eVerification – A benchmarking program for measuring and optimizing AI’s carbon footprint, in collaboration with the Green Software Foundation.

- RAI AI TCO eVerification – Independent Total Cost of Ownership verification for AI investments, in collaboration with the FinOps Foundation.

- RAI Agentic AI Purple Teaming – Proactive adversarial testing and defense strategies using industry standards and curated benchmarking data. This AI security agent identifies vulnerabilities, stress-tests AI systems, and mitigates risks such as hallucinations, attacks, bias, and model drift.

- RAI Premium Research – Access exclusive, in-depth analysis on responsible AI implementation, governance, and risk management. Stay ahead of emerging risks, regulatory changes, and AI best practices.

MOVING FORWARD: BUILDING A RESPONSIBLE AI FUTURE

The Responsible AI Institute is not merely adapting to AI’s rapid evolution — it is leading the charge in defining how AI should be integrated responsibly. Over the next few months, RAI Institute will introduce:

- Scholarships, hackathons, and long-term internships funded by private foundations.

- A new global advisory board focused on Agentic AI regulations, safety, and innovation.

- Upskilling programs to equip organizations with the tools to navigate the next era of AI governance.

JOIN THE MOVEMENT: THE TIME FOR RESPONSIBLE AI IS NOW!

Join us in shaping the future of responsible AI. Sign up for early access to the RAI AI Agents and RAISE Pathways Programs.

About the Responsible AI Institute

Since 2016, the Responsible AI Institute has been at the forefront of advancing responsible AI adoption across industries. As a non-profit organization, RAI Institute partners with policymakers, industry leaders, and technology providers to develop responsible AI benchmarks, governance frameworks, and best practices. With the launch of RAISE Pathways, RAI Institute equips organizations with expert-led training, real-time assessments, and implementation toolkits to strengthen AI governance, enhance transparency, and drive innovation at scale.

Members include leading companies such as Boston Consulting Group, AMD, KPMG, Chevron, Ally, Mastercard, and many others dedicated to bringing responsible AI to all industry sectors.

View source version on businesswire.com: https://www.businesswire.com/news/home/20250219659651/en/

Contacts

Media Contact

Nicole McCaffrey

Head of Strategy & Marketing, RAI Institute

nicole@responsible.ai

+1 (440) 785-3588